Published October 7th, 2025 // By: DigitalMint Cyber

AI-Driven Cyber Threats in 2025 and How to Defend Against Them

DigitalMint Cyber original blog post

Cybersecurity Awareness Month 2025

Artificial Intelligence (AI) is transforming our digital world, offering powerful tools for productivity, personalization, and automation. But cybercriminals are weaponizing the same technology to launch smarter, more dynamic, and more convincing attacks at an alarming rate.

From AI-generated phishing emails and deepfake scams to adaptive malware, threats are evolving rapidly, becoming more sophisticated, more scalable, and harder to detect than ever before.

AI is reshaping the cybersecurity landscape. Whether you’re an individual, a small business owner, or leading a team, now is the time to rethink your security strategy through the lens of AI.

This post breaks down what’s changed and what you and your small team should watch out for, then outlines practical strategies you can adopt to strengthen your defenses.

Common AI-Driven Threats Targeting Individuals in 2025

AI has significantly advanced social engineering tactics by enabling attackers to generate highly realistic and personalized phishing messages. Rather than relying on generic, poorly written messages, attackers use AI to continuously learn from targets, adapt messaging strategies, and scale their attacks with speed and precision.

Deepfake impersonation & voice cloning: Scammers are using AI to clone voices and faces, impersonating family, friends, or coworkers to ask for urgent money transfers or personal data.

Hyper-Personalized Phishing Attacks: Unlike traditional scam emails, AI can now generate grammatically flawless, high-quality personalized messages that appear legitimate. These can arrive via email, text, or even social media.

Fake AI Chatbots: Scammers use malicious chatbots or fake websites to pretend to be customer support or government services and trick users into giving away information.

AI-Supported Social Engineering: AI can scrape your public data and analyze your online behavior, likes, and social media posts within minutes to craft messages that feel personally relevant and believable.

Adaptive Malware: Attackers leverage AI to adapt malware and ransomware on the fly, bypassing traditional detection tools and maximizing damage.

Best Practices to Protect Yourself From AI-Powered Attacks

Strengthen Your Digital Hygiene: Follow the essential habits from this blog post to build strong cyber hygiene into your everyday routine.

Stay Skeptical by Default: Be cautious of unexpected requests, even if they appear to come from people you know. Verify any request by calling or starting a video chat yourself, using contact details you already trust.

Stay Informed to Recognize Signs of AI Manipulation: Learn to spot deepfakes by looking out for unnatural facial expressions, irregular eye movements or blinking in videos, awkward pauses in voice messages, audio that lags or doesn’t sync properly, and perfectly written messages with unusual urgency.

Minimize Your Digital Footprint: Limit the personal information you share online, such as your birthday, location, job details, and contacts. This data is digital gold for AI-driven scams.

Never Input Confidential Information into AI Tools: Avoid entering sensitive information such as passwords, personally identifiable information (PII), financial data, legal documents, or anything marked internal or confidential into AI platforms.

Check AI Tool Settings: Many AI tools allow users to toggle settings around data use. Disable data sharing and training where possible to protect your input.
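One way to act on the advice above about keeping confidential information out of AI tools is to scrub text before it is pasted or sent anywhere. The following is a minimal sketch in Python, not a complete solution: the `PATTERNS` table and the `redact` function are hypothetical names introduced here for illustration, and the three patterns (email address, US Social Security number, payment card number) are assumptions that cover only a small slice of what counts as sensitive data.

```python
import re

# Hypothetical, illustrative patterns only. Real PII detection needs much
# broader coverage (names, addresses, API keys, internal document markers).
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str) -> str:
    """Replace every match of each pattern with a placeholder like [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = "Summarize this: contact jane.doe@example.com, SSN 123-45-6789."
    print(redact(prompt))
```

A filter like this is a safety net rather than a guarantee. It will miss anything the patterns do not describe, so the habit of not entering confidential material in the first place still matters most.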

AI-Driven Threats Targeting Small Businesses & Teams

AI is lowering the barrier to entry for cybercriminals. They no longer need to be expert hackers; they just need access to the right tools. As a result, small businesses are increasingly vulnerable to AI-powered cyber attacks.

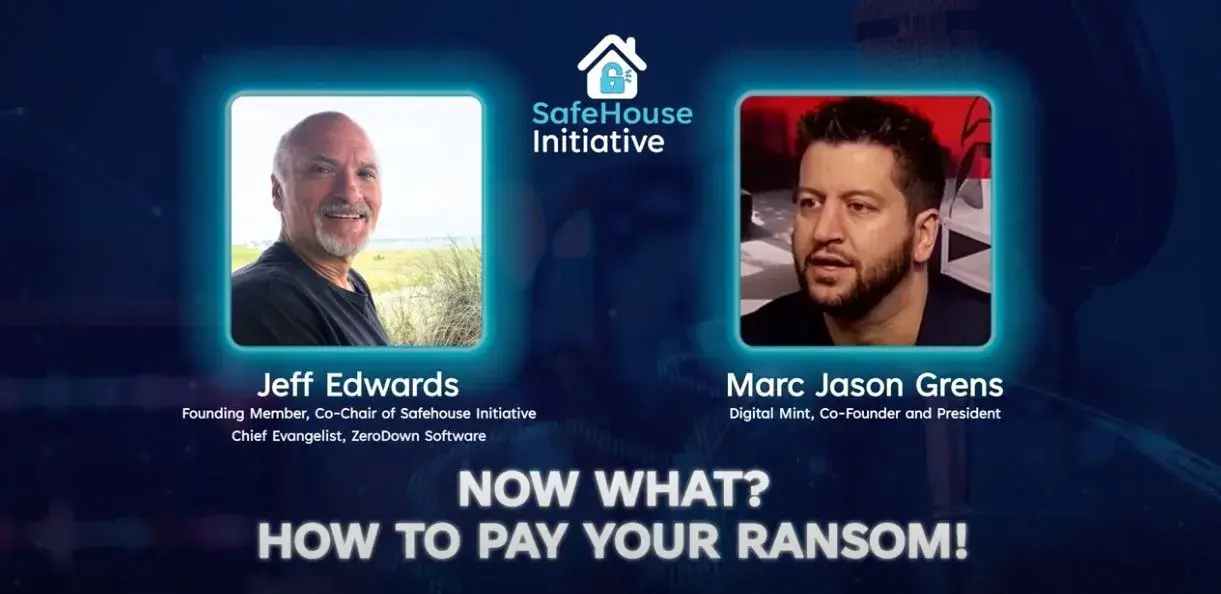

Business Email Compromise (BEC) 2.0: AI tools are now used to clone writing styles and craft realistic emails that appear to come from company executives and leadership, tricking employees into transferring money or sharing credentials.

Automated Reconnaissance: AI bots and tools can scan your entire digital presence, parse team profiles, identify vulnerabilities, and assemble attack vectors within minutes.

AI-Assisted Malware and Ransomware: Malware can now autonomously adapt to its environment, altering its behavior to evade traditional antivirus tools and selectively target high-value data.

AI Exploits and Model Manipulation: Hackers are even attacking the AI tools themselves by poisoning data sets, exploiting APIs, and manipulating models to gain access.

Generative AI Security Risks: Large Language Models (LLMs) are used to automate the creation of malicious code, fake identities, and misinformation, multiplying threats at scale.

Best Practices & Solutions for Defending Your Business

Implement Zero Trust by Default: Never assume internal traffic is safe. Enforce strict role-based access controls (RBAC), multi-factor authentication (MFA), and robust authentication protocols for all users and devices.

Train Your Team Regularly: Invest in ongoing security training that focuses on new AI-driven threats such as deepfake scams, and reinforce it with phishing simulations.

Deploy AI-Powered Security Solutions: Use security tools such as Endpoint Detection and Response (EDR), Security Information and Event Management (SIEM), and Security Orchestration, Automation, and Response (SOAR) that leverage AI-driven behavioral analytics and anomaly detection to identify and respond to advanced threats, including those potentially orchestrated by AI.

Secure All Endpoints and Cloud Services: Monitor employee devices and SaaS platforms regularly. Ensure remote workers follow the same security standards as in-office teams.

Secure Your Supply Chain: Your security is only as strong as your weakest link. Ensure vendors and contractors adhere to strong cybersecurity standards and best practices.

Create and Test Incident Response Plans: Plan for worst-case scenarios by developing and regularly testing response plans for ransomware, data breaches, and impersonation attacks.

Build Strong Governance from Day One: Define and enforce strict internal AI usage policies and governance practices. Align them with established frameworks such as the NIST AI Risk Management Framework, and use continuous monitoring and real-time auditing to confirm they remain effective.
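To make the Zero Trust practice listed above more concrete, here is a minimal Python sketch of a deny-by-default access check that combines RBAC with an MFA requirement. Everything in it is an assumption made for illustration: the `ROLE_PERMISSIONS` table, the `Request` fields, and the `is_allowed` function are hypothetical and do not come from any particular product or framework.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission map. Anything not listed here is denied.
ROLE_PERMISSIONS = {
    "finance": {"invoices:read", "payments:approve"},
    "support": {"tickets:read", "tickets:write"},
}


@dataclass(frozen=True)
class Request:
    user: str
    role: str
    permission: str
    mfa_verified: bool
    from_internal_network: bool  # recorded, but never used to grant access


def is_allowed(req: Request) -> bool:
    """Deny by default: allow only with verified MFA and an explicit grant."""
    if not req.mfa_verified:
        return False
    # Unknown roles map to an empty set, so they are denied as well.
    return req.permission in ROLE_PERMISSIONS.get(req.role, set())


if __name__ == "__main__":
    inside_no_mfa = Request("ana", "finance", "payments:approve", False, True)
    remote_with_mfa = Request("ana", "finance", "payments:approve", True, False)
    print(is_allowed(inside_no_mfa), is_allowed(remote_with_mfa))
```

The design choice worth noticing is that `from_internal_network` plays no part in the decision. A request from inside the office without MFA is refused, while a remote request with MFA and the right role is accepted, which is the "never assume internal traffic is safe" idea in practice.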